Langfuse

🪢 Open source LLM engineering platform. Observability, metrics, evals, prompt management, testing, prompt playground, datasets, LLM evaluations -- 🍊YC W23 🤖 integrate via Typescript, Python / Decor…


Langfuse uses GitHub Discussions for support and feature requests.

We're hiring. Join us in product engineering and technical go-to-market roles.

Langfuse is an open source LLM engineering platform. It helps teams collaboratively develop, monitor, evaluate, and debug AI applications. Langfuse can be self-hosted in minutes and is battle-tested.

✨ Core Features

-

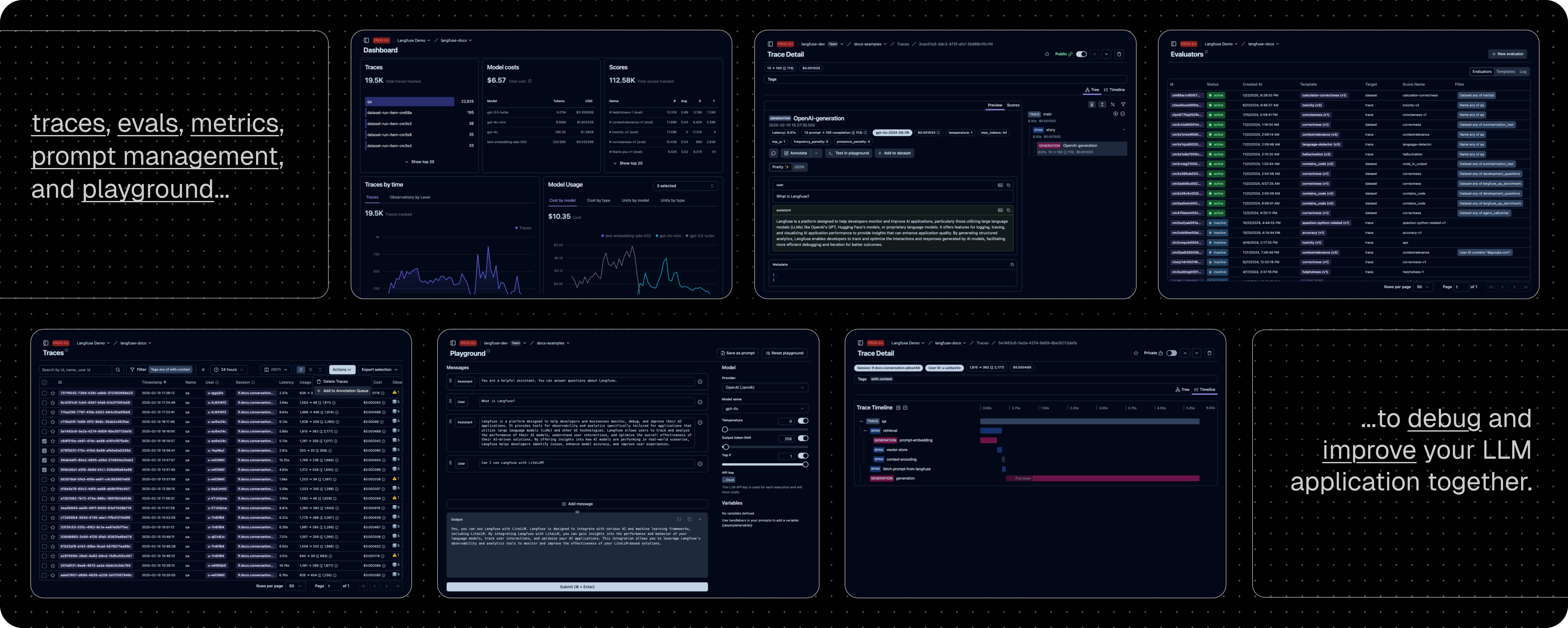

LLM Application Observability: Instrument your app and start ingesting traces to Langfuse, thereby tracking LLM calls and other relevant logic in your app such as retrieval, embedding, or agent actions. Inspect and debug complex logs and user sessions. Try the interactive demo to see this in action.

-

Prompt Management helps you centrally manage, version control, and collaboratively iterate on your prompts. Thanks to strong caching on server and client side, you can iterate on prompts without adding latency to your application.

-

Evaluations are key to the LLM application development workflow, and Langfuse adapts to your needs. It supports LLM-as-a-judge, user feedback collection, manual labeling, and custom evaluation pipelines via APIs/SDKs.

-

Datasets enable test sets and benchmarks for evaluating your LLM application. They support continuous improvement, pre-deployment testing, structured experiments, flexible evaluation, and seamless integration with frameworks like LangChain and LlamaIndex.

-

LLM Playground is a tool for testing and iterating on your prompts and model configurations, shortening the feedback loop and accelerating development. When you see a bad result in tracing, you can directly jump to the playground to iterate on it.

-

Comprehensive API: Langfuse is frequently used to power bespoke LLMOps workflows while using the building blocks provided by Langfuse via the API. OpenAPI spec, Postman collection, and typed SDKs for Python, JS/TS are available.
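
As a rough illustration of calling the public API directly, the sketch below builds (but does not send) an authenticated request using only the Python standard library. It assumes the API accepts HTTP Basic authentication with the public key as the username and the secret key as the password, and that traces are listed at `/api/public/traces` with a `limit` query parameter; treat the API reference as the authoritative source for paths and parameters. The helper name `build_traces_request` is hypothetical and the keys are placeholders.

```python
import base64
import urllib.parse
import urllib.request


def build_traces_request(host, public_key, secret_key, limit=10):
    """Build a GET request for the trace list endpoint (assumed path)."""
    # Basic auth header value: base64("<public_key>:<secret_key>")
    token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    query = urllib.parse.urlencode({"limit": limit})
    url = f"{host.rstrip('/')}/api/public/traces?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


request = build_traces_request("https://cloud.langfuse.com", "pk-lf-...", "sk-lf-...")
print(request.full_url)
# To actually send it, pass the request to urllib.request.urlopen(request).
```

Building the request separately from sending it keeps the authentication logic easy to inspect and test without network access.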

📦 Deploy Langfuse

Langfuse Cloud

Managed deployment by the Langfuse team, with a generous free tier (Hobby plan) and no credit card required.

Self-Host Langfuse

Run Langfuse on your own infrastructure:

- Local (Docker Compose): Run Langfuse on your own machine in 5 minutes using Docker Compose.

      # Get a copy of the latest Langfuse repository
      git clone https://github.com/langfuse/langfuse.git
      cd langfuse

      # Run the langfuse docker compose
      docker compose up

- Kubernetes (Helm): Run Langfuse on a Kubernetes cluster using Helm. This is the preferred production deployment.

- VM: Run Langfuse on a single Virtual Machine using Docker Compose.

- Planned: Cloud-specific deployment guides. Please upvote and comment on the following threads: AWS, Google Cloud, Azure.

See self-hosting documentation to learn more about the architecture and configuration options.
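
After starting a self-hosted instance, it can be handy to wait until the web service responds before sending traces. The following is a minimal sketch, not part of Langfuse itself: the helper names are hypothetical, and the address `http://localhost:3000` and the path `/api/public/health` are assumptions that should be confirmed against the self-hosting documentation for your deployment.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=5):
    """Return the HTTP status code for url, or None if it is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with an error status (e.g. 503).
        return error.code
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return None


def wait_until_healthy(url, attempts=30, delay=2.0, fetch=http_status, sleep=time.sleep):
    """Poll url until it answers with HTTP 200; return True on success."""
    for attempt in range(attempts):
        if fetch(url) == 200:
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


# Example call (assumed local address and health path):
# wait_until_healthy("http://localhost:3000/api/public/health")
```

The `fetch` and `sleep` parameters exist only so the polling loop can be exercised without a running server.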

🔌 Integrations

Main Integrations:

| Integration | Supports | Description |
|---|---|---|
| SDK | Python, JS/TS | Manual instrumentation using the SDKs for full flexibility. |
| OpenAI | Python, JS/TS | Automated instrumentation using drop-in replacement of OpenAI SDK. |
| LangChain | Python, JS/TS | Automated instrumentation by passing callback handler to LangChain application. |
| LlamaIndex | Python | Automated instrumentation via LlamaIndex callback system. |
| Haystack | Python | Automated instrumentation via Haystack content tracing system. |
| LiteLLM | Python, JS/TS (proxy only) | Use any LLM as a drop-in replacement for GPT. Use Azure, OpenAI, Cohere, Anthropic, Ollama, VLLM, Sagemaker, HuggingFace, Replicate (100+ LLMs). |
| Vercel AI SDK | JS/TS | TypeScript toolkit designed to help developers build AI-powered applications with React, Next.js, Vue, Svelte, Node.js. |
| API | | Directly call the public API. OpenAPI spec available. |

Packages integrated with Langfuse:

| Name | Type | Description |
|---|---|---|
| Instructor | Library | Library to get structured LLM outputs (JSON, Pydantic). |
| DSPy | Library | Framework that systematically optimizes language model prompts and weights. |
| Mirascope | Library | Python toolkit for building LLM applications. |
| Ollama | Model (local) | Easily run open source LLMs on your own machine. |
| Amazon Bedrock | Model | Run foundation and fine-tuned models on AWS. |
| AutoGen | Agent Framework | Open source LLM platform for building distributed agents. |
| Flowise | Chat/Agent UI | JS/TS no-code builder for customized LLM flows. |
| Langflow | Chat/Agent UI | Python-based UI for LangChain, designed with react-flow to provide an effortless way to experiment and prototype flows. |
| Dify | Chat/Agent UI | Open source LLM app development platform with no-code builder. |
| OpenWebUI | Chat/Agent UI | Self-hosted LLM chat web UI supporting various LLM runners including self-hosted and local models. |
| Promptfoo | Tool | Open source LLM testing platform. |
| LobeChat | Chat/Agent UI | Open source chatbot platform. |
| Vapi | Platform | Open source voice AI platform. |
| Inferable | Agents | Open source LLM platform for building distributed agents. |
| Gradio | Chat/Agent UI | Open source Python library to build web interfaces like Chat UI. |
| Goose | Agents | Open source LLM platform for building distributed agents. |
| smolagents | Agents | Open source AI agents framework. |
| CrewAI | Agents | Multi agent framework for agent collaboration and tool use. |

🚀 Quickstart

Instrument your app and start ingesting traces to Langfuse, thereby tracking LLM calls and other relevant logic in your app such as retrieval, embedding, or agent actions. Inspect and debug complex logs and user sessions.

1️⃣ Create new project

- Create a Langfuse account or self-host

- Create a new project

- Create new API credentials in the project settings

2️⃣ Log your first LLM call

The @observe() decorator makes it easy to trace any Python LLM application. In this quickstart we also use the Langfuse OpenAI integration to automatically capture all model parameters.

> [!TIP]
> Not using OpenAI? Visit our documentation to learn how to log other models and frameworks.

    pip install langfuse openai

Set your credentials as environment variables:

    LANGFUSE_SECRET_KEY="sk-lf-..."
    LANGFUSE_PUBLIC_KEY="pk-lf-..."
    LANGFUSE_HOST="https://cloud.langfuse.com" # 🇪🇺 EU region
    # LANGFUSE_HOST="https://us.cloud.langfuse.com" # 🇺🇸 US region
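
Before running the example, it can help to confirm that these variables are actually visible to the Python process. The sketch below is a hypothetical helper, not part of the Langfuse SDK; it only checks the three variable names shown in this quickstart and assumes you want all of them set explicitly.

```python
import os

# Variable names taken from the quickstart configuration.
EXPECTED_VARS = ("LANGFUSE_SECRET_KEY", "LANGFUSE_PUBLIC_KEY", "LANGFUSE_HOST")


def missing_langfuse_vars(env=os.environ):
    """Return the names of expected variables that are unset or empty."""
    return [name for name in EXPECTED_VARS if not env.get(name)]


missing = missing_langfuse_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
```

Passing a plain dictionary as `env` makes the check easy to try without touching the real environment.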

Then run the following Python script:

    from langfuse.decorators import observe
    from langfuse.openai import openai  # OpenAI integration


    @observe()
    def story():
        return openai.chat.completions.create(
            model="gpt-4o",
            messages=[{"role": "user", "content": "What is Langfuse?"}],
        ).choices[0].message.content


    @observe()
    def main():
        return story()


    main()

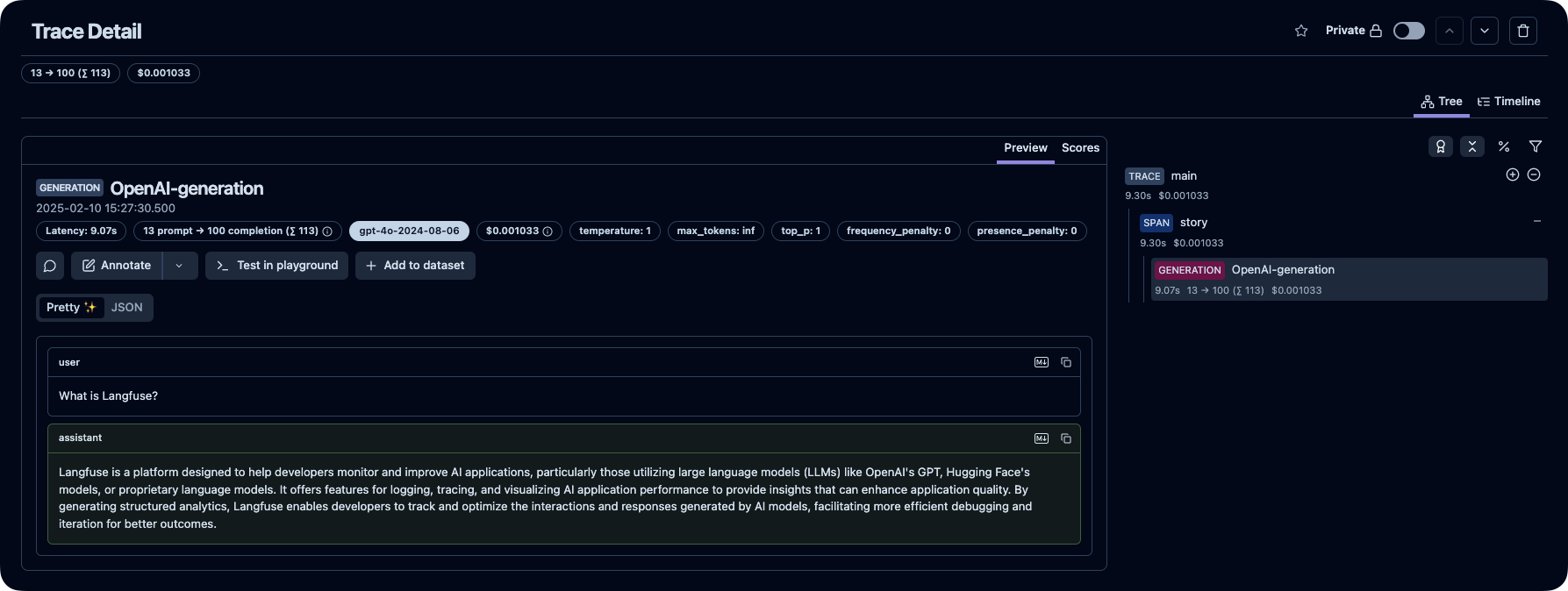

3️⃣ See traces in Langfuse

See your language model calls and other application logic in Langfuse.

Public example trace in Langfuse

> [!TIP]
> Learn more about tracing in Langfuse or play with the interactive demo.

💭 Support

Finding an answer to your question:

- Our documentation is the best place to start looking for answers. It is comprehensive, and we invest significant time into maintaining it. You can also suggest edits to the docs via GitHub.

- Langfuse FAQs answer the most common questions.

- Use "Ask AI" to get instant answers to your questions.

Support Channels:

- Ask any question in our public Q&A on GitHub Discussions. Please include as much detail as possible (e.g. code snippets, screenshots, background information) to help us understand your question.

- Request a feature on GitHub Discussions.

- Report a Bug on GitHub Issues.

- For time-sensitive queries, ping us via the in-app chat widget.

🤝 Contributing

Your contributions are welcome!

- Vote on Ideas in GitHub Discussions.

- Raise and comment on Issues.

- Open a PR; see CONTRIBUTING.md for details on how to set up a development environment.

🥇 License

This repository is MIT licensed, except for the ee folders. See LICENSE and docs for more details.

❤️ Open Source Projects Using Langfuse

Top open-source Python projects that use Langfuse, ranked by stars (Source):

🔒 Security & Privacy

We take data security and privacy seriously. Please refer to our Security and Privacy page for more information.

Telemetry

By default, Langfuse automatically reports basic usage statistics of self-hosted instances to a centralized server (PostHog).

This helps us to:

- Understand how Langfuse is used and improve the most relevant features.

- Track overall usage for internal and external (e.g. fundraising) reporting.

None of the data is shared with third parties, and it does not include any sensitive information. We want to be super transparent about this, and you can find the exact data we collect here.

You can opt out by setting TELEMETRY_ENABLED=false.